macOS Dynamic Desktop

Dark Mode is one of the most popular additions to macOS — especially among us developer types, who tend towards light-on-dark color themes in text editors and appreciate this new visual consistency across the system.

A couple of years back, there was similar fanfare for Night Shift, which helped to reduce eye strain from hacking late into the night (or early in the morning, as it were).

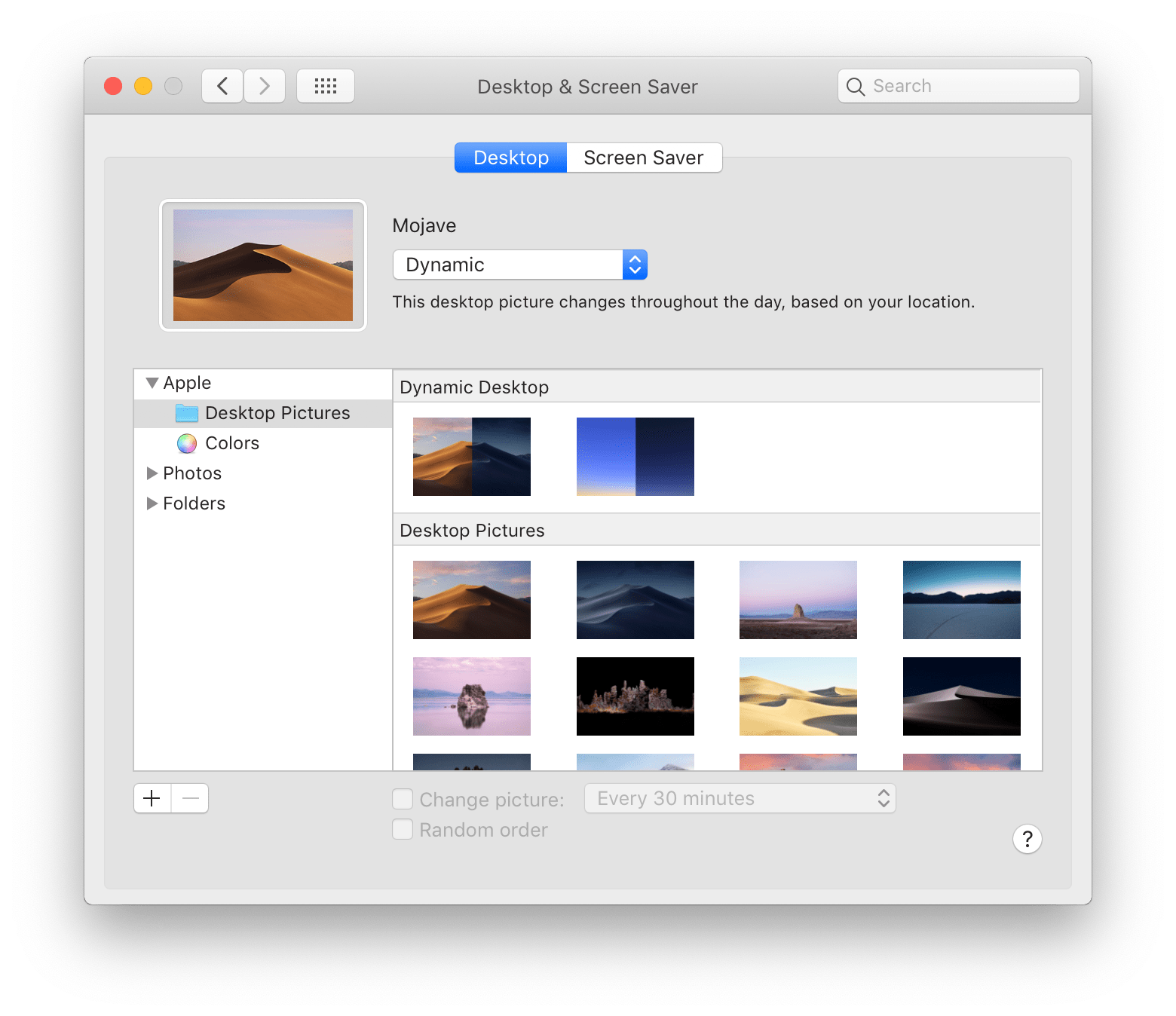

If you triangulate from those two macOS features, you get Dynamic Desktops, also new in Mojave. Now when you go to “System Preferences > Desktop & Screen Saver”, you have the option to select a “Dynamic” desktop picture that changes throughout the day, based on your location.

The result is subtle and delightful. Having a background that tracks the passage of time makes the desktop feel alive, in tune with the natural world. (If nothing else, it makes for a lovely visual effect when switching Dark Mode on and off.)

But how does it work, exactly?

That’s the question for this week’s NSHipster article.

The answer involves a deep dive into image formats, a little bit of reverse-engineering and even some spherical trigonometry.

The first step to understanding how Dynamic Desktop works is to get hold of a dynamic image.

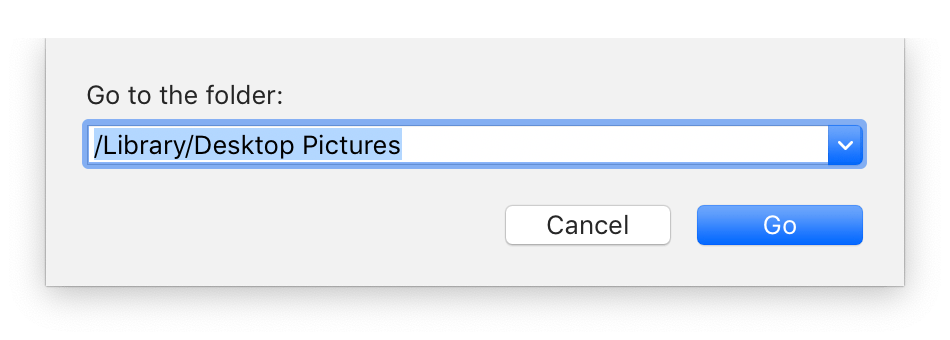

If you’re running macOS Mojave, open Finder, select “Go > Go to Folder…” (⇧⌘G), and enter “/Library/Desktop Pictures/”.

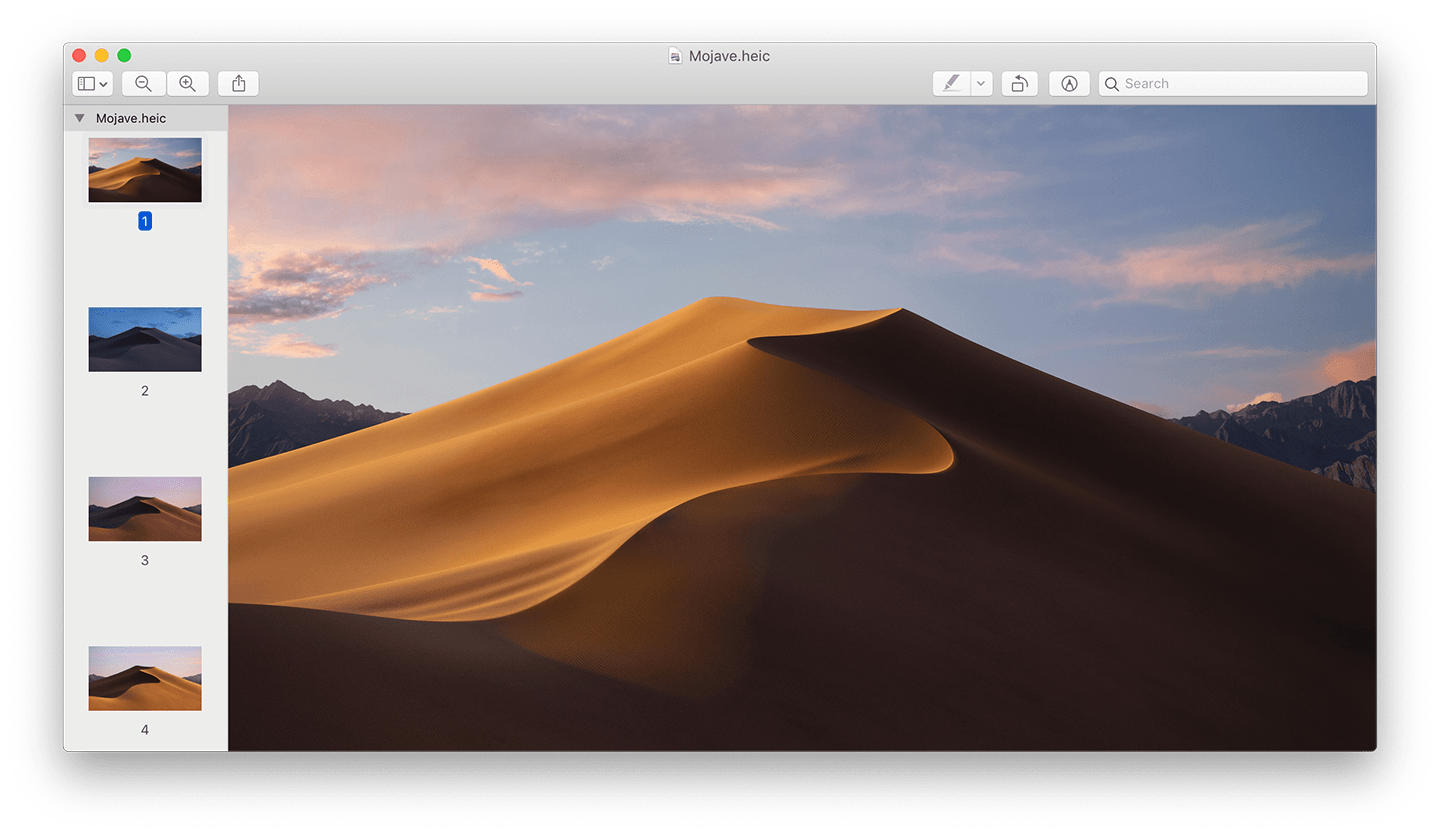

In this directory, you should find a file named “Mojave.heic”. Double-click it to open it in Preview.

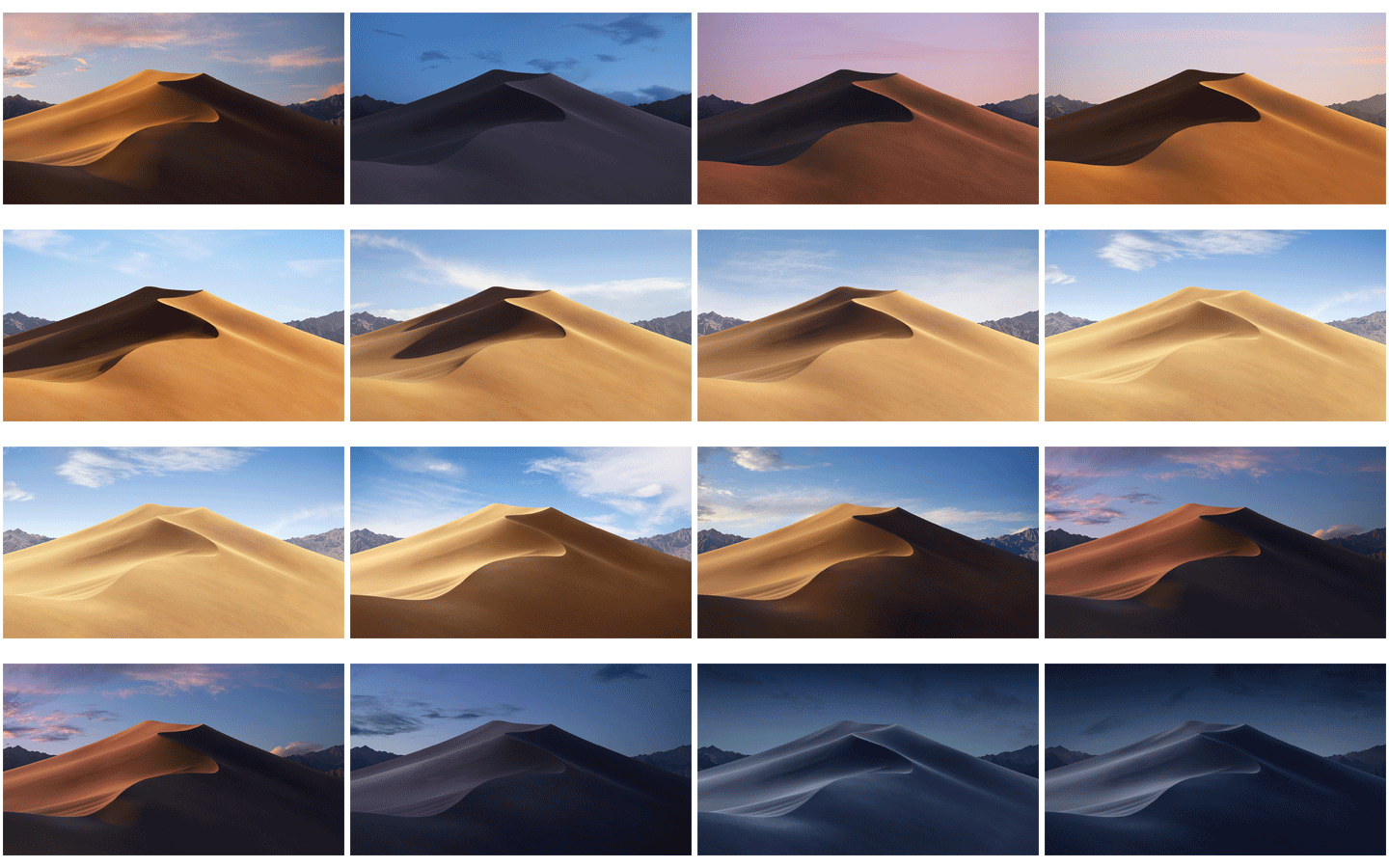

In Preview, the sidebar shows a list of thumbnails numbered 1 through 16, each showing a different view of the desert scene.

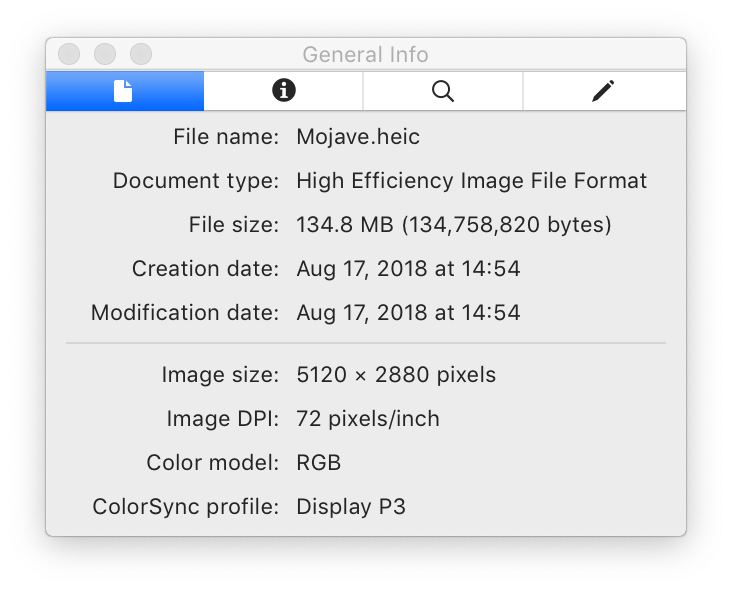

If we select “Tools > Show Inspector” (⌘I), we get some general information about what we’re looking at:

Unfortunately, that’s about all Preview gives us (at least at the time of writing). If we click on the next panel over, “More Info Inspector”, we don’t learn a whole lot more about our subject:

| Property | Value |
| --- | --- |
| Color Model | RGB |
| Depth | 8 |
| Pixel Height | 2,880 |
| Pixel Width | 5,120 |
| Profile Name | Display P3 |

If we want to learn more, we’ll need to roll up our sleeves and get our hands dirty with some low-level APIs.

Digging Deeper with CoreGraphics

Let’s start our investigation by creating a new Xcode Playground. For simplicity, we can hard-code a URL to the “Mojave.heic” file on our system.

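import Foundation
import CoreGraphics

// Requires macOS Mojave
let url = URL(fileURLWithPath: "/Library/Desktop Pictures/Mojave.heic")

Next, create a CGImageSource, copy its metadata, and enumerate over each of its tags: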
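A minimal sketch of those three steps, using ImageIO’s CGImageSource and CGImageMetadata functions. The helper name metadataTags(at:) is our own invention for illustration, and the sketch assumes that every tag value we care about can be bridged to a string.

```swift
import Foundation
import ImageIO

/// Returns the name and value of each metadata tag on the first image in a file.
/// `metadataTags(at:)` is a hypothetical helper, not an ImageIO API.
func metadataTags(at url: URL) -> [String: String] {
    // Creating the source fails (returns nil) if the file is missing or unreadable.
    guard let source = CGImageSourceCreateWithURL(url as CFURL, nil),
          let metadata = CGImageSourceCopyMetadataAtIndex(source, 0, nil),
          let tags = CGImageMetadataCopyTags(metadata) as? [CGImageMetadataTag]
    else {
        return [:]
    }

    var result: [String: String] = [:]
    for tag in tags {
        // Skip tags whose name or value isn't available as a string.
        guard let name = CGImageMetadataTagCopyName(tag) as String?,
              let value = CGImageMetadataTagCopyValue(tag) as? String
        else {
            continue
        }
        result[name] = value
    }
    return result
}

// The hard-coded path assumes macOS Mojave.
let mojaveURL = URL(fileURLWithPath: "/Library/Desktop Pictures/Mojave.heic")
for (name, value) in metadataTags(at: mojaveURL) {
    print(name, value)
}
```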

When we run this code, we get two results:

hasXMP, which has a value of "True",

and solar, which has a decidedly less understandable value:

Yn...

Shining Light on Solar

Most of us would look at that wall of text and quietly close the lid of our MacBook Pro. But, as some of you surely noticed, this text looks an awful lot like it’s Base64-encoded.

Let’s test out our hypothesis in code:

if name == "solar" {
    let data = Data(base64Encoded: value)!
    print(String(data: data, encoding: .ascii))
}

bplist00Ò\u{01}\u{02}\u{03}...

What’s that?

bplist, followed by a bunch of garbled nonsense?

By golly, that’s the file signature for a binary property list.
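That hunch is easy to check without an image at hand: binary property lists begin with the ASCII bytes bplist, so decoding the Base64 text and looking at the first few bytes is enough. A small sketch, in which looksLikeBinaryPropertyList is a hypothetical helper and the sample string is simply the Base64 encoding of "bplist00", not the value stored in the image:

```swift
import Foundation

/// Returns true if `base64` decodes to data that starts with the `bplist` signature.
/// (Hypothetical helper for illustration.)
func looksLikeBinaryPropertyList(_ base64: String) -> Bool {
    guard let data = Data(base64Encoded: base64) else {
        return false // not valid Base64 at all
    }
    return data.starts(with: Array("bplist".utf8))
}

// "YnBsaXN0MDA=" is the Base64 encoding of the eight ASCII bytes "bplist00".
print(looksLikeBinaryPropertyList("YnBsaXN0MDA=")) // true
// "SGVsbG8=" is the Base64 encoding of "Hello".
print(looksLikeBinaryPropertyList("SGVsbG8="))     // false
```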

Let’s see if PropertyListSerialization can make any sense of it…

if name == "solar" {
    let data = Data(base64Encoded: value)!
    let propertyList = try PropertyListSerialization.propertyList(from: data, options: [], format: nil)
    print(propertyList)
}

(
    ap = {
        d = 15;
        l = 0;
    };
    si = (
        {
            a = "-0.3427528387535028";
            i = 0;
            z = "270.9334057827345";
        },
        ...
        {
            a = "-38.04743388682423";
            i = 15;
            z = "53.50908581251309";
        }
    )
)

Now we’re talking!

We have two top-level keys:

The ap key corresponds to a dictionary containing integers for the d and l keys.

The si key corresponds to an array of dictionaries with integer and floating-point values.

Of the nested dictionary keys, i is the easiest to understand: incrementing from 0 to 15, it’s the index of the image in the sequence. It’d be hard to guess a and z without any additional information, but they turn out to represent the altitude (a) and azimuth (z) of the sun in the corresponding pictures.
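One way to make those single-letter keys easier to work with is to decode the property list into Codable types. In the sketch below, the type and property names (SolarMetadata, AP, Entry, and so on) are our own; only the keys ap, d, l, si, i, a, and z come from the file. Rather than assume exactly how Apple’s file stores its values, the sketch builds a small sample of its own with numeric values and decodes that:

```swift
import Foundation

struct SolarMetadata: Codable {
    // We don't know what `d` and `l` mean, so they keep their original names.
    struct AP: Codable {
        let d: Int
        let l: Int
    }

    struct Entry: Codable {
        let index: Int
        let altitude: Double
        let azimuth: Double

        // Map readable property names onto the single-letter keys.
        private enum CodingKeys: String, CodingKey {
            case index = "i", altitude = "a", azimuth = "z"
        }
    }

    let ap: AP
    let si: [Entry]
}

// Build a two-entry sample and encode it as a binary property list.
let sample = SolarMetadata(
    ap: SolarMetadata.AP(d: 1, l: 0),
    si: [
        SolarMetadata.Entry(index: 0, altitude: -0.34, azimuth: 270.93),
        SolarMetadata.Entry(index: 1, altitude: -38.05, azimuth: 53.51),
    ]
)
let plistEncoder = PropertyListEncoder()
plistEncoder.outputFormat = .binary
let sampleData = try plistEncoder.encode(sample)

// Decode it again, this time through the readable property names.
let decoded = try PropertyListDecoder().decode(SolarMetadata.self, from: sampleData)
for entry in decoded.si {
    print(entry.index, entry.altitude, entry.azimuth)
}
```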

Calculating Solar Position

At the time of writing, those of us in the northern hemisphere are settling into the season of autumn and its shorter, colder days, whereas those of us in the southern hemisphere are gearing up for hotter and longer days. The changing of the seasons reminds us that the duration of a solar day depends on where you are on the planet and where the planet is in its orbit around the sun.

The good news is that astronomers can tell you — with perfect accuracy — where the sun is in the sky for any location or time. The bad news is that the necessary calculations are complicated to say the least.

Honestly, we don’t really understand it ourselves, and are pretty much just porting whatever code we manage to find online. After some trial and error, we were able to arrive at something that seems to work (PRs welcome!):
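For a sense of what such a calculation involves, here’s a minimal sketch built on the low-precision formulas published in the Astronomical Almanac. It isn’t the code that produced the figures in this article: approximateSolarPosition and SolarPosition are names we made up, atmospheric refraction is ignored, and the results should only be trusted to within a fraction of a degree.

```swift
import Foundation

/// Approximate position of the sun, in degrees. (Hypothetical type.)
struct SolarPosition {
    let azimuth: Double   // measured clockwise from true north
    let elevation: Double // measured up from the horizon
}

/// Low-precision solar position; latitude is positive north, longitude positive east.
func approximateSolarPosition(at date: Date, latitude: Double, longitude: Double) -> SolarPosition {
    func toRadians(_ value: Double) -> Double { return value * .pi / 180 }
    func toDegrees(_ value: Double) -> Double { return value * 180 / .pi }
    func wrap360(_ value: Double) -> Double {
        let remainder = value.truncatingRemainder(dividingBy: 360)
        return remainder < 0 ? remainder + 360 : remainder
    }

    // Days since the J2000.0 epoch (2000-01-01 12:00 UTC).
    let n = date.timeIntervalSince1970 / 86_400 - 10_957.5

    // Where the sun sits along the ecliptic.
    let meanLongitude = wrap360(280.460 + 0.9856474 * n)
    let meanAnomaly = toRadians(wrap360(357.528 + 0.9856003 * n))
    let eclipticLongitude = toRadians(
        meanLongitude + 1.915 * sin(meanAnomaly) + 0.020 * sin(2 * meanAnomaly)
    )
    let obliquity = toRadians(23.439 - 0.0000004 * n)

    // Convert to equatorial coordinates.
    let rightAscension = atan2(cos(obliquity) * sin(eclipticLongitude), cos(eclipticLongitude))
    let declination = asin(sin(obliquity) * sin(eclipticLongitude))

    // Greenwich mean sidereal time (in degrees) gives the local hour angle.
    let siderealTime = wrap360(15 * (18.697374558 + 24.06570982441908 * n))
    let hourAngle = toRadians(siderealTime + longitude) - rightAscension

    // Convert to horizontal coordinates for the observer's latitude.
    let phi = toRadians(latitude)
    let elevation = asin(sin(phi) * sin(declination)
        + cos(phi) * cos(declination) * cos(hourAngle))
    let azimuth = atan2(-sin(hourAngle),
                        tan(declination) * cos(phi) - sin(phi) * cos(hourAngle))

    return SolarPosition(azimuth: wrap360(toDegrees(azimuth)),
                         elevation: toDegrees(elevation))
}
```

Feeding it a Date along with a latitude and longitude returns the azimuth, measured clockwise from north, and the elevation above the horizon, both in degrees.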

import Foundation
import CoreLocation

Solar Position on Oct 1, 2018 at 12:00
180.73470025840783° Az / 49.27482549913847° El

At noon on October 1, 2018, the sun shines on Apple Park from the south, about halfway between the horizon and directly overhead.

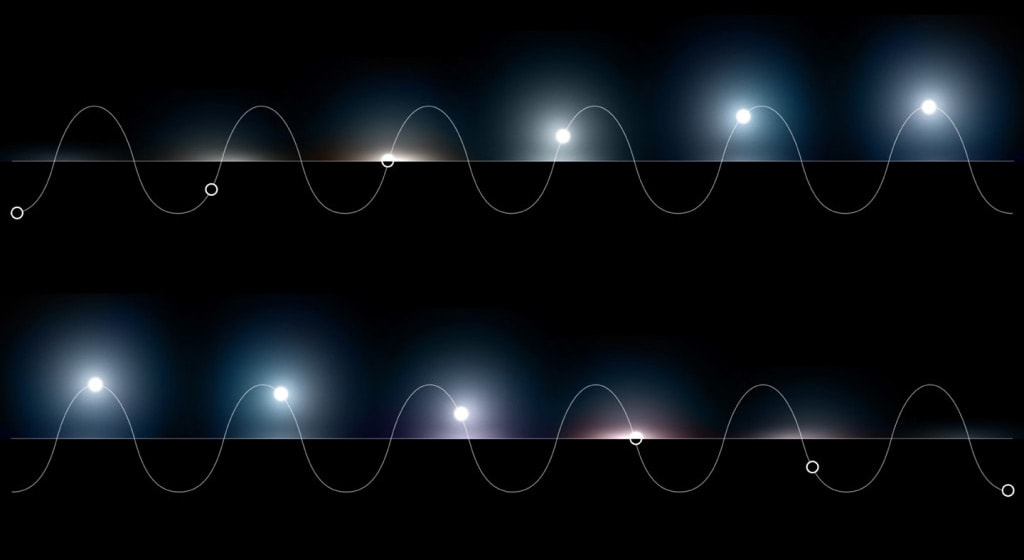

If we track the position of the sun over an entire day, we get a sinusoidal shape reminiscent of the Apple Watch “Solar” face.

Extending Our Understanding of XMP

Alright, enough astronomy for the moment. Let’s ground ourselves in something much more banal: de facto XML metadata standards.

Remember the hasXMP metadata key from before?

Yeah, that.

XMP, or Extensible Metadata Platform, is a standard format for tagging files with metadata. What does XMP look like? Brace yourself:
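To see a packet for ourselves, we can ask ImageIO to serialize an image’s metadata as XMP. A minimal sketch, in which xmpPacket(at:) is a hypothetical helper and the hard-coded path assumes macOS Mojave:

```swift
import Foundation
import ImageIO

/// Returns the XMP packet for the first image in a file, or nil if it can't be read.
/// (Hypothetical helper for illustration.)
func xmpPacket(at url: URL) -> String? {
    guard let source = CGImageSourceCreateWithURL(url as CFURL, nil),
          let metadata = CGImageSourceCopyMetadataAtIndex(source, 0, nil),
          let xmpData = CGImageMetadataCreateXMPData(metadata, nil)
    else {
        return nil
    }
    // XMP packets are XML text, so decode the bytes as UTF-8.
    return String(data: xmpData as Data, encoding: .utf8)
}

let desktopURL = URL(fileURLWithPath: "/Library/Desktop Pictures/Mojave.heic")
if let xmp = xmpPacket(at: desktopURL) {
    print(xmp)
}
```

Here’s the kind of packet that comes back: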

<x:xmpmeta xmlns:x="adobe:ns:meta/" x:xmptk="XMP Core 5.4.0">
  <rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#">
    <rdf:Description rdf:about=""
                     xmlns:apple_desktop="http://ns.apple.com/namespace/1.0/">
      <apple_desktop:solar>
        <!-- (Base64-Encoded Metadata) -->
      </apple_desktop:solar>
    </rdf:Description>
  </rdf:RDF>
</x:xmpmeta>

Yuck.

But it’s a good thing that we checked. We’ll need to honor that apple_desktop namespace to make our own Dynamic Desktop images work correctly.

Speaking of, let’s get started on that.

Creating Our Own Dynamic Desktop

Let’s create a data model to represent a Dynamic Desktop:
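A minimal sketch of such a model might look like the following. The type and property names here (DynamicDesktop, Image, Metadata, and their fields) are assumptions chosen to match the description in this section.

```swift
import CoreGraphics

/// A sequence of images, each tagged with the sun's position when it was captured.
struct DynamicDesktop {
    let images: [Image]

    struct Image {
        let cgImage: CGImage
        let metadata: Metadata

        // Declaring Codable here lets the compiler synthesize the conformance.
        struct Metadata: Codable {
            let index: Int
            let altitude: Double
            let azimuth: Double
        }
    }
}
```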

Each Dynamic Desktop comprises an ordered sequence of images, each of which has image data, stored in a CGImage object, and metadata, as discussed before. We adopt Codable in the Metadata declaration in order for the compiler to automatically synthesize conformance. We’ll take advantage of that when it comes time to generate the Base64-encoded binary property list.

Writing to an Image Destination

First, create a CGImageDestination with a specified output URL. The file type is heic and the source count is equal to the number of images to be included.

Next, enumerate over each image in the dynamic desktop object. By using the enumerated() method, we also get the current index for each loop so that we can set the image metadata on the first image:

Aside from the unrefined nature of Core Graphics APIs, the code is pretty straightforward. The only part that requires further explanation is the call to CGImageMetadataTagCreate.

Because of a mismatch between how image and container metadata are structured and how they’re represented in code, we have to implement Encodable for the Dynamic Desktop model ourselves:

With that in place, we can implement the aforementioned base64Encoded method like so:
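To make both steps concrete without dragging Core Graphics along, here’s a sketch that works on the metadata alone. SolarMetadataContainer and ImageMetadata are hypothetical stand-ins for the model, and base64EncodedPropertyList() is a name of our choosing. The keys mirror the property list we decoded earlier. Since we don’t know what d and l mean, the values written for them are a guess that copies the pattern in Apple’s file (l is 0, d is the index of the last image).

```swift
import Foundation

struct ImageMetadata: Encodable {
    let index: Int
    let altitude: Double
    let azimuth: Double

    // Write the single-letter keys found in Apple's file.
    private enum CodingKeys: String, CodingKey {
        case index = "i", altitude = "a", azimuth = "z"
    }
}

struct SolarMetadataContainer: Encodable {
    let images: [ImageMetadata]

    private enum CodingKeys: String, CodingKey { case ap, si }
    private enum NestedKeys: String, CodingKey { case d, l }

    func encode(to encoder: Encoder) throws {
        var container = encoder.container(keyedBy: CodingKeys.self)

        // Guess: mirror Apple's file, where l is 0 and d is the last index.
        var nested = container.nestedContainer(keyedBy: NestedKeys.self, forKey: .ap)
        try nested.encode(0, forKey: .l)
        try nested.encode(max(images.count - 1, 0), forKey: .d)

        // One dictionary per image, in sequence order.
        var list = container.nestedUnkeyedContainer(forKey: .si)
        for image in images {
            try list.encode(image)
        }
    }

    /// Encodes the metadata as a binary property list, then as Base64 text.
    func base64EncodedPropertyList() throws -> String {
        let encoder = PropertyListEncoder()
        encoder.outputFormat = .binary
        return try encoder.encode(self).base64EncodedString()
    }
}

let container = SolarMetadataContainer(images: [
    ImageMetadata(index: 0, altitude: -0.34, azimuth: 270.93),
    ImageMetadata(index: 1, altitude: 49.27, azimuth: 180.73),
])
let encodedMetadata = try container.base64EncodedPropertyList()
print(encodedMetadata)
```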

Once the for-in loop is exhausted, and all images and metadata are written, we call CGImageDestinationFinalize to finalize the image destination and write the image to disk.
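Putting the steps in this section together, the whole write might be sketched as a single function. This is an illustration under assumptions rather than a definitive implementation: writeDynamicDesktop is a hypothetical helper that takes plain CGImage values and an already-encoded metadata string, "public.heic" is the uniform type identifier for HEIC, and the "xmp:solar" tag path is an assumption that you may need to adjust.

```swift
import Foundation
import ImageIO

/// Writes `images` to one HEIC file, attaching `base64Metadata` to the first image.
/// Returns false if any step fails. (Hypothetical helper for illustration.)
func writeDynamicDesktop(images: [CGImage], base64Metadata: String, to outputURL: URL) -> Bool {
    guard !images.isEmpty,
          let destination = CGImageDestinationCreateWithURL(
              outputURL as CFURL,
              "public.heic" as CFString,
              images.count,
              nil)
    else {
        return false
    }

    for (index, image) in images.enumerated() {
        guard index == 0 else {
            // Every image after the first is added without metadata.
            CGImageDestinationAddImage(destination, image, nil)
            continue
        }

        // Only the first image carries the solar metadata tag.
        let metadata = CGImageMetadataCreateMutable()
        guard let tag = CGImageMetadataTagCreate(
                  "http://ns.apple.com/namespace/1.0/" as CFString,
                  "apple_desktop" as CFString,
                  "solar" as CFString,
                  .string,
                  base64Metadata as CFString),
              CGImageMetadataSetTagWithPath(metadata, nil, "xmp:solar" as CFString, tag)
        else {
            return false
        }
        CGImageDestinationAddImageAndMetadata(destination, image, metadata, nil)
    }

    // Nothing is written to disk until the destination is finalized.
    return CGImageDestinationFinalize(destination)
}
```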

If everything worked as expected, you should now be the proud owner of a brand new Dynamic Desktop. Nice!

We love the Dynamic Desktop feature in Mojave, and are excited to see the same proliferation of them that we saw when wallpapers hit the mainstream with Windows 95.

If you’re so inclined, here are a few ideas for where to go from here:

Automatically Generating a Dynamic Desktop from Photos

It’s mind-blowing to think that something as transcendent as the movement of celestial bodies can be reduced to a system of equations with two inputs: time and place.

In the example before, this information is hard-coded, but you could ostensibly extract that information from images automatically.

By default, the camera on most phones captures Exif metadata each time a photo is snapped. This metadata can include the time at which the photo was taken and the GPS coordinates of the device at the time.

By reading time and location information directly from image metadata, you can automatically determine solar position and simplify the process of generating a Dynamic Desktop from a series of photos.
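As a rough sketch of that idea, the function below pulls a capture time and coordinates out of an image properties dictionary, such as the one ImageIO’s CGImageSourceCopyPropertiesAtIndex returns. CaptureInfo and captureInfo(from:timeZone:) are hypothetical names, and the string keys are what we understand the corresponding kCGImageProperty constants to contain; on macOS, prefer the constants themselves. Exif timestamps carry no time zone, so the caller has to supply one.

```swift
import Foundation

/// Time and place extracted from a photo's properties. (Hypothetical type.)
struct CaptureInfo {
    let date: Date
    let latitude: Double
    let longitude: Double
}

/// Reads the capture time and GPS coordinates from an image properties dictionary.
func captureInfo(from properties: [String: Any], timeZone: TimeZone) -> CaptureInfo? {
    guard let exif = properties["{Exif}"] as? [String: Any],
          let gps = properties["{GPS}"] as? [String: Any],
          let timestamp = exif["DateTimeOriginal"] as? String,
          let latitude = gps["Latitude"] as? Double,
          let longitude = gps["Longitude"] as? Double
    else {
        return nil
    }

    // Exif dates look like "2018:10:01 13:00:00".
    let formatter = DateFormatter()
    formatter.locale = Locale(identifier: "en_US_POSIX")
    formatter.timeZone = timeZone
    formatter.dateFormat = "yyyy:MM:dd HH:mm:ss"
    guard let date = formatter.date(from: timestamp) else {
        return nil
    }

    // GPS values are stored unsigned; the "Ref" fields give the hemisphere.
    let isSouth = (gps["LatitudeRef"] as? String) == "S"
    let isWest = (gps["LongitudeRef"] as? String) == "W"
    return CaptureInfo(date: date,
                       latitude: isSouth ? -latitude : latitude,
                       longitude: isWest ? -longitude : longitude)
}

// A made-up properties dictionary standing in for a real photo.
let sampleExif: [String: Any] = ["DateTimeOriginal": "2018:10:01 13:00:00"]
let sampleGPS: [String: Any] = [
    "Latitude": 37.3327, "LatitudeRef": "N",
    "Longitude": 122.0053, "LongitudeRef": "W",
]
let sampleProperties: [String: Any] = ["{Exif}": sampleExif, "{GPS}": sampleGPS]

// Assume the photo was taken seven hours behind UTC.
let sampleInfo = captureInfo(from: sampleProperties,
                             timeZone: TimeZone(secondsFromGMT: -7 * 3600)!)
if let info = sampleInfo {
    print(info.date, info.latitude, info.longitude)
}
```

The resulting date and coordinates are exactly the two inputs a solar position calculation needs.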

Shooting a Time Lapse on Your iPhone

Want to put your new iPhone XS to good use? (Or more accurately, “Want to use your old iPhone for something productive while you procrastinate selling it?”)

Mount your phone against a window, plug it into a charger, set the Camera to Timelapse mode, and hit the “Record” button. By extracting key frames from the resulting video, you can make your very own bespoke Dynamic Desktop.

You might also want to check out Skyflow or similar apps that more easily allow you to take still photos at predefined intervals.

Generating Landscapes from GIS Data

If you can’t stand to be away from your phone for an entire day (sad) or don’t have anything remarkable to look at (also sad), you could always create your own reality (sounds sadder than it is).

Using an app like Terragen, you can render photo-realistic 3D landscapes, with fine-tuned control over the earth, sun, and sky.

You can make it even easier for yourself by downloading an elevation map from the U.S. Geological Survey’s National Map website and using that as a template for your 3D rendering project.

Downloading Pre-Made Dynamic Desktops

Or if you have actual work to do and can’t be bothered to spend your time making pretty pictures, you can always just pay someone else to do it for you.

We’re personally fans of the 24 Hour Wallpaper app. If you have any other recommendations, @ us on Twitter!