iOS 13

Apple announced a lot at WWDC this year. During the conference and the days that followed, a lot of us walked around in a daze, attempting to recover from having our minds “hashtag-mindblown’d” (#🤯). But now, a few months later, after everything announced has launched (well, almost everything), those very same keynote announcements elicit far different reactions:

Although the lion’s share of attention has been showered on the aforementioned features, not nearly enough coverage has been given to the rest of iOS 13 — and that’s a shame, because this release is among the most exciting in terms of new functionality and satisfying in terms of improving existing functionality.

So to mark last week’s release of iOS 13, we’re taking a look at some obscure (largely undocumented) APIs that you can now use in your apps. We’ve scavenged the best bits out of the iOS 13 Release Notes and API diffs, and now present them to you.

Here are some of our favorite things you can do starting in iOS 13:

Generate Rich Representations of URLs

New in iOS 13, the LinkPresentation framework provides a convenient, built-in way to replicate the rich previews of URLs you see in Messages. If your app has any kind of chat or messaging functionality, you’ll definitely want to check this out.

Rich previews of URLs have a rich history going at least as far back as the early ’00s, with the spread of Microformats by semantic web pioneers, and early precursors to Digg and Reddit using khtml2png to generate thumbnail images of web pages. Fast forward to 2010, with the rise of social media and user-generated content, when Facebook created the OpenGraph protocol to allow web publishers to customize how their pages looked when posted on the Newsfeed.

These days, most websites reliably have OpenGraph <meta> tags on their site that provide a summary of their content for social networks, search engines, and anywhere else that links are trafficked.

For example, here’s what you would see if you did “View Source” for this very webpage:

<meta property="og:site_name" content="NSHipster" />

<meta property="og:image" content="https://nshipster.com/logo.png" />

<meta property="og:type" content="article" />

<meta property="og:title" content="i

If you wanted to consume this information in your app, you can now use the LinkPresentation framework’s LPMetadata class to fetch the metadata and optionally construct a representation:

import Link

After setting appropriate constraints (and perhaps a call to size), you’ll get the following, which the user can tap to preview the linked webpage:
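The fetch-and-display flow described above might be sketched roughly as follows. This assumes the classes in question are `LPMetadataProvider` and `LPLinkView`; the `showPreview(for:in:)` helper and its parameters are hypothetical names introduced for illustration.

```swift
import LinkPresentation
import UIKit

// Hypothetical helper: fetches metadata for `url` and adds a rich preview to `container`.
func showPreview(for url: URL, in container: UIView) {
    // A metadata provider is a one-shot object; create a new one per fetch.
    let provider = LPMetadataProvider()

    provider.startFetchingMetadata(for: url) { metadata, error in
        guard let metadata = metadata else {
            print("Metadata fetch failed: \(String(describing: error))")
            return
        }

        // The completion handler isn't guaranteed to run on the main thread,
        // so hop back before touching any views.
        DispatchQueue.main.async {
            let linkView = LPLinkView(metadata: metadata)
            linkView.sizeToFit()
            container.addSubview(linkView)
        }
    }
}
```

In a real app you would likely replace the `sizeToFit()` call with Auto Layout constraints and surface the error to the user rather than printing it.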

Alternatively, if you already have the metadata in-app, or otherwise can’t or don’t want to fetch remotely, you can construct an LPLink directly:
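As a minimal sketch of the no-network path, assuming the class meant here is `LPLinkMetadata`, you could populate the metadata by hand and pass it to a link view. The URL and title below are placeholders, not values from this article.

```swift
import LinkPresentation

// Placeholder values for illustration only.
let metadata = LPLinkMetadata()
metadata.url = URL(string: "https://example.com/article")
metadata.originalURL = metadata.url
metadata.title = "Example Article"

// No request is made here; the view renders whatever the metadata contains.
let linkView = LPLinkView(metadata: metadata)
```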

let metadata = LPLink

Perform On-Device Speech Recognition

SFSpeech gets a major upgrade in iOS 13, most notably for its added support for on-device speech recognition.

Previously, transcription required an internet connection and was restricted to a maximum of 1-minute duration with daily limits for requests. But now, you can do speech recognition completely on-device and offline, with no limitations. The only caveats are that offline transcription isn’t as good as what you’d get with a server connection, and is only available for certain languages.

To determine whether offline transcription is available for the user’s locale, check the SFSpeech property supports.
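Here is one way that check might look in practice, assuming the property referred to is `supportsOnDeviceRecognition` on `SFSpeechRecognizer`. The `makeOnDeviceRequest(forAudioAt:locale:)` function is a hypothetical helper, not part of the Speech framework.

```swift
import Speech

/// Returns a file transcription request restricted to on-device recognition,
/// or nil if the locale is unsupported or on-device recognition is unavailable.
@available(iOS 13, macOS 10.15, *)
func makeOnDeviceRequest(forAudioAt fileURL: URL,
                         locale: Locale = .current) -> SFSpeechURLRecognitionRequest? {
    guard let recognizer = SFSpeechRecognizer(locale: locale),
          recognizer.supportsOnDeviceRecognition
    else {
        return nil
    }

    let request = SFSpeechURLRecognitionRequest(url: fileURL)
    // Never fall back to the server, even when a connection is available.
    request.requiresOnDeviceRecognition = true
    return request
}
```

You would still need to request speech recognition authorization from the user before starting a recognition task with the returned request.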

At the time of publication, the list of supported languages is as follows:

- English
  - United States (en-US)
  - Canada (en-CA)
  - Great Britain (en-GB)
  - India (en-IN)
- Spanish
  - United States (es-US)
  - Mexico (es-MX)
  - Spain (es-ES)
- Italian
  - Italy (it-IT)
- Portuguese
  - Brazil (pt-BR)
- Russian
  - Russia (ru-RU)
- Turkish
  - Turkey (tr-TR)
- Chinese
  - Mandarin (zh-cmn)
  - Cantonese (zh-yue)

But that’s not all for speech recognition in iOS 13! SFSpeech now provides information including speaking rate and average pause duration, as well as voice analytics features like jitter (variations in pitch) and shimmer (variations in amplitude).

import Speech

guard SFSpeech

Information about pitch and voicing and other features could be used by your app (perhaps in coordination with CoreML) to differentiate between speakers or determine subtext from a speaker’s inflection.
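To make the analytics described above a bit more concrete, here is a rough sketch of reading them from a recognition result, using the property names from the iOS 13 SDK (`speakingRate`, `averagePauseDuration`, and `voiceAnalytics`). The `summarize(_:)` and `mean(_:)` functions are hypothetical helpers added for illustration.

```swift
import Speech

/// Averages a list of per-frame feature values; returns nil for an empty list.
func mean(_ values: [Double]) -> Double? {
    guard !values.isEmpty else { return nil }
    return values.reduce(0, +) / Double(values.count)
}

@available(iOS 13, macOS 10.15, *)
func summarize(_ result: SFSpeechRecognitionResult) {
    let transcription = result.bestTranscription
    print("Speaking rate (words per minute): \(transcription.speakingRate)")
    print("Average pause (seconds): \(transcription.averagePauseDuration)")

    for segment in transcription.segments {
        // Voice analytics are optional and may be missing for some segments.
        guard let analytics = segment.voiceAnalytics else { continue }

        let jitter = mean(analytics.jitter.acousticFeatureValuePerFrame)
        let shimmer = mean(analytics.shimmer.acousticFeatureValuePerFrame)
        print(segment.substring,
              "jitter:", jitter ?? .nan,
              "shimmer:", shimmer ?? .nan)
    }
}
```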

Send and Receive Web Socket Messages

Speaking of the Foundation URL Loading System, we now have native support for something that’s been at the top of our wish list for many years: web sockets.

Thanks to the new URLSession class in iOS 13, you can now incorporate real-time communications in your app as easily and reliably as sending HTTP requests, all without any third-party library or framework:

let url = URL(string: "wss://…")!

let web

For years now, networking has been probably the fastest-moving part of the whole Apple technology stack. Each WWDC, there’s so much to talk about that they routinely have to break their content across two separate sessions. 2019 was no exception, and we highly recommend that you take some time to check out this year’s “Advances in Networking” sessions (Part 1, Part 2).
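Returning to the web socket support mentioned above, a minimal exchange might look something like the following. It assumes the class in question is `URLSessionWebSocketTask`; the `openSocket(to:)` helper is a hypothetical name, and the endpoint in the usage note is a placeholder.

```swift
import Foundation

// Hypothetical helper: opens a socket, sends one message, and logs one reply.
@available(iOS 13, macOS 10.15, *)
func openSocket(to url: URL) -> URLSessionWebSocketTask {
    let task = URLSession.shared.webSocketTask(with: url)
    task.resume()

    // Send a single text frame.
    task.send(.string("Hello")) { error in
        if let error = error {
            print("Send failed: \(error)")
        }
    }

    // Receive a single message; call receive again to keep listening.
    task.receive { result in
        switch result {
        case .success(let message):
            switch message {
            case .string(let text):
                print("Received text: \(text)")
            case .data(let data):
                print("Received \(data.count) bytes")
            @unknown default:
                print("Received an unknown message type")
            }
        case .failure(let error):
            print("Receive failed: \(error)")
        }
    }

    return task
}
```

You could call this as `openSocket(to: URL(string: "wss://example.com/socket")!)` and later close the connection with `cancel(with:reason:)` on the returned task.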

Do More With Maps

MapKit is another part of Apple SDKs that’s consistently had a strong showing at WWDC year after year. And it’s often the small touches that make the most impact in our day-to-day.

For instance, the new MKMap API in iOS 13 makes it much easier to constrain a map’s viewport to a particular region without locking it down completely.
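As a small sketch of constraining the viewport, assuming the API meant here is `MKMapView.CameraBoundary`, you could limit panning to a region like so. The coordinate and distances are arbitrary placeholder values.

```swift
import MapKit

let mapView = MKMapView()

// Placeholder region: roughly 10 km across, centered on an arbitrary coordinate.
let center = CLLocationCoordinate2D(latitude: 37.3349, longitude: -122.0090)
let region = MKCoordinateRegion(center: center,
                                latitudinalMeters: 10_000,
                                longitudinalMeters: 10_000)

// The user can still pan and zoom, but the map's center stays inside the region.
mapView.cameraBoundary = MKMapView.CameraBoundary(coordinateRegion: region)
```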

let region = MKCoordinate

And with the new MKPoint API, you can now customize the appearance of map views to show only certain kinds of points of interest (whereas previously it was an all-or-nothing proposition).
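For example, assuming the API in question is `MKPointOfInterestFilter`, a map that shows only places to eat and drink might be configured like this. The choice of categories is ours, purely for illustration.

```swift
import MapKit

let mapView = MKMapView()

// Show cafes and restaurants; hide every other point-of-interest category.
let filter = MKPointOfInterestFilter(including: [.cafe, .restaurant])
mapView.pointOfInterestFilter = filter
```

There is also an `init(excluding:)` initializer for the opposite case, where you want everything except a handful of categories.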

let filter = MKPoint

Finally, with MKGeo, we now have a built-in way to pull in GeoJSON shapes from web services and other data sources.
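Here is a rough sketch of decoding GeoJSON into map shapes, assuming the class referred to is `MKGeoJSONDecoder`. The inline GeoJSON document and the `shapes(from:)` helper are made up for illustration; in practice the data would come from a file or a web service.

```swift
import MapKit

// Placeholder GeoJSON: a single point feature.
let geoJSON = """
{
  "type": "Feature",
  "properties": { "name": "Example" },
  "geometry": { "type": "Point", "coordinates": [-122.0090, 37.3349] }
}
""".data(using: .utf8)!

/// Hypothetical helper: flattens decoded GeoJSON objects into MapKit shapes.
func shapes(from data: Data) throws -> [MKShape] {
    var result: [MKShape] = []
    for object in try MKGeoJSONDecoder().decode(data) {
        if let feature = object as? MKGeoJSONFeature {
            // A feature wraps one or more geometries plus optional JSON properties.
            for geometry in feature.geometry {
                result.append(geometry)
            }
        } else if let shape = object as? MKShape {
            // Bare geometries decode directly to shapes.
            result.append(shape)
        }
    }
    return result
}
```

Point geometries come back as annotations and polygons or lines as overlays, so you would add them to a map view with `addAnnotation(_:)` or `addOverlay(_:)` as appropriate.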

let decoder = MKGeo

Keep Promises in JavaScript

If you enjoyed our article about JavaScriptCore, you’ll be thrilled to know that JSValue objects now natively support promises.

For the uninitiated: in JavaScript, a Promise is an object that represents the eventual completion (or rejection) of an asynchronous operation and its resulting value. Promises are a mainstay of modern JS development, perhaps most notably within the fetch API.
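As a tentative sketch of creating a promise from native code, assuming the initializer involved is `JSValue(newPromiseIn:fromExecutor:)`, something like the following should work. The `greeting` and `received` names are hypothetical and exist only for this example.

```swift
import JavaScriptCore

let context = JSContext()!

// The executor receives the promise's `resolve` and `reject` functions as JSValues.
let promise = JSValue(newPromiseIn: context) { resolve, reject in
    // Resolve immediately; a real app might resolve after a network call completes.
    resolve?.call(withArguments: ["Hello from Swift"])
}

// Expose the promise to JavaScript and attach a handler to it.
context.setObject(promise, forKeyedSubscript: "greeting" as NSString)
context.evaluateScript("""
var received;
greeting.then(function (value) { received = value; });
""")
```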

Another addition to JavaScriptCore in iOS 13 is support for symbols (no, not those symbols). For more information about init(new, there are no docs to refer to, so just guess how to use it.

Respond to Objective-C Associated Objects (?)

On a lark, we decided to see if there was anything new in Objective-C this year and were surprised to find out about objc_setHook_setAssociatedObject. Again, we don’t have much to go on except the declaration, but it looks like you can now configure a block to execute when an associated object is set. For anyone still deep in the guts of the Objective-C runtime, this sounds like it could be handy.

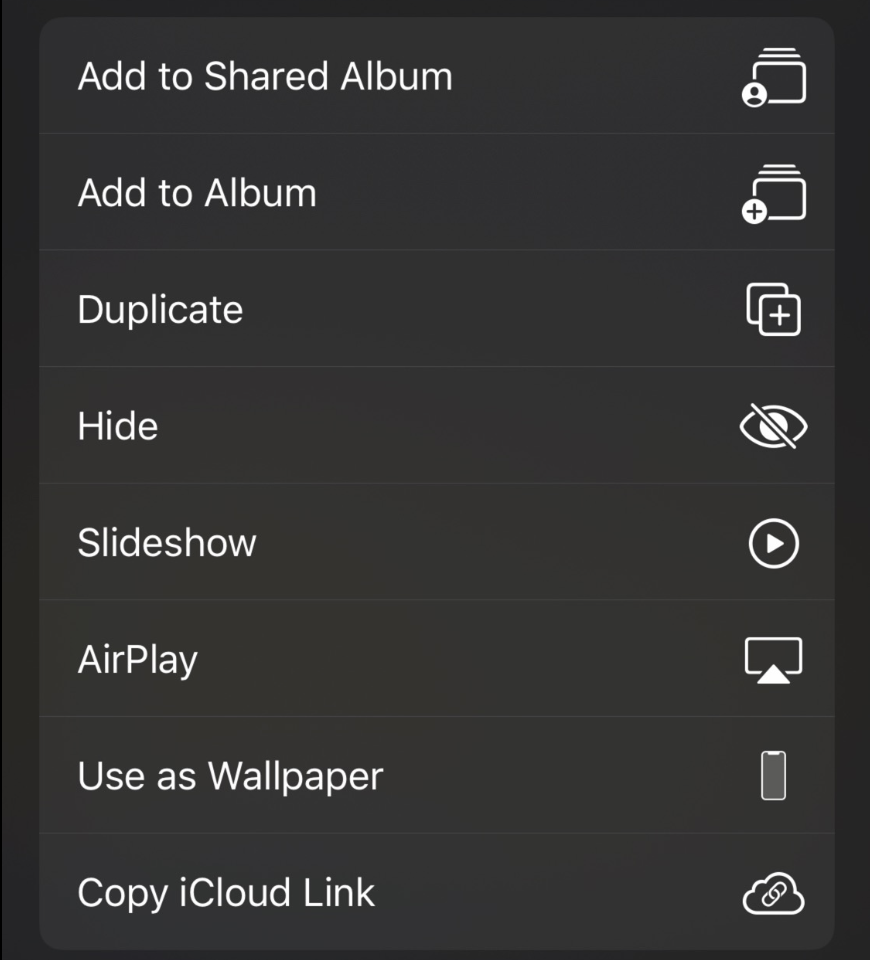

Tame Activity Items (?)

On the subject of missing docs: UIActivity seems like a compelling option for managing actions in the new iOS 13 share sheets, but we don’t really know where to start…

Shame that we don’t have the information we need to take advantage of this yet.

Format Lists and Relative Times

As discussed in a previous article, iOS 13 brings two new formatters to Foundation: List and Relative.

Not to harp on about this, but both of them are still undocumented, so if you want to learn more, we’d recommend checking out that article from July. Or, if you’re in a rush, here’s a quick example demonstrating how to use both of them together:
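A sketch along those lines, assuming the two formatters are `ListFormatter` and `RelativeDateTimeFormatter`, might combine relative day names into a single phrase. The fixed `en_US` locale is our choice, made so the output doesn’t depend on the device settings.

```swift
import Foundation

let locale = Locale(identifier: "en_US")

let relativeFormatter = RelativeDateTimeFormatter()
relativeFormatter.locale = locale
// Prefer named forms such as "yesterday" over numeric ones such as "1 day ago".
relativeFormatter.dateTimeStyle = .named

let listFormatter = ListFormatter()
listFormatter.locale = locale

// Describe the day before, the current day, and the day after, relative to now.
let days = [-1, 0, 1].map { offset in
    relativeFormatter.localizedString(from: DateComponents(day: offset))
}

// Join the three descriptions into one localized list.
let sentence = listFormatter.string(from: days)
print(sentence ?? "")
```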

import Foundation

let relative

Track the Progress of Enqueued Operations

Starting in iOS 13, Operation now has a progress property.

Granted, (NS)Progress objects aren’t the most straightforward or convenient things to work with (we’ve been meaning to write an article about them at some point), but they have a complete and well-considered API, and even have some convenient slots in app frameworks.

For example, check out how easy it is to wire up a UIProgress to display the live-updating progress of an operation queue by way of its observed property:
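Here is a minimal sketch of that wiring, assuming the pieces involved are the `progress` property of `OperationQueue` and the `observedProgress` property of `UIProgressView`. The three sleeping operations are stand-ins for real work.

```swift
import UIKit

let queue = OperationQueue()

// The queue only starts reporting progress once a total unit count is set.
// By default, each finished operation counts as one completed unit.
queue.progress.totalUnitCount = 3

// The progress view updates itself as the observed progress changes.
let progressView = UIProgressView(progressViewStyle: .default)
progressView.observedProgress = queue.progress

for index in 1...3 {
    queue.addOperation {
        Thread.sleep(forTimeInterval: 0.1) // stand-in for real work
        print("Finished operation \(index)")
    }
}
```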

import UIKit

fileprivate class Download

It’s also worth mentioning a few other APIs coming in iOS 13, like schedule(after:interval:tolerance:options:_:), which clues Operation into the new Combine framework in a nice way, and add, which presumably works like Dispatch barrier blocks (though without documentation, it’s anyone’s guess).

Manage Background Tasks with Ease

One of the things that often differentiates category-defining apps from their competitors is their use of background tasks to make sure the app is fully synced and updated for the next time it enters the foreground.

iOS 7 was the first release to provide an official API for scheduling background tasks (though developers had employed various creative approaches prior to this). But in the intervening years, multiple factors, from an increase in iOS app capabilities and complexity to a growing emphasis on performance, efficiency, and privacy for apps, have created a need for a more comprehensive solution.

That solution came to iOS 13 by way of the new BackgroundTasks framework.

As described in this year’s WWDC session “Advances in App Background Execution”, the framework distinguishes between two broad classes of background tasks:

- app refresh tasks: short-lived tasks that keep an app up-to-date throughout the day

- background processing tasks: long-lived tasks for performing deferrable maintenance tasks

The WWDC session and the accompanying sample code project do a great job of explaining how to incorporate both of these into your app. But if you want the quick gist of it, here’s a small example of an app that schedules periodic refreshes from a web server:
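For a rough idea of the moving parts, here is a sketch of scheduling an app refresh task with `BGTaskScheduler`. The task identifier, the endpoint URL, and the three function names are hypothetical; the identifier would also need to be listed under `BGTaskSchedulerPermittedIdentifiers` in the app’s Info.plist.

```swift
import UIKit
import BackgroundTasks

// Hypothetical identifier and endpoint, for illustration only.
let refreshTaskIdentifier = "com.example.app.refresh"
let refreshURL = URL(string: "https://example.com/latest.json")!

/// Call before the app finishes launching, e.g. in application(_:didFinishLaunchingWithOptions:).
func registerRefreshTask() -> Bool {
    return BGTaskScheduler.shared.register(forTaskWithIdentifier: refreshTaskIdentifier,
                                           using: nil) { task in
        guard let task = task as? BGAppRefreshTask else { return }
        handleAppRefresh(task)
    }
}

/// Asks the system to run the refresh task no sooner than 15 minutes from now.
func scheduleAppRefresh() {
    let request = BGAppRefreshTaskRequest(identifier: refreshTaskIdentifier)
    request.earliestBeginDate = Date(timeIntervalSinceNow: 15 * 60)
    do {
        try BGTaskScheduler.shared.submit(request)
    } catch {
        print("Could not schedule app refresh: \(error)")
    }
}

func handleAppRefresh(_ task: BGAppRefreshTask) {
    // Schedule the next refresh before doing any work.
    scheduleAppRefresh()

    let dataTask = URLSession.shared.dataTask(with: refreshURL) { data, _, error in
        // A real app would store `data` here before reporting completion.
        task.setTaskCompleted(success: error == nil && data != nil)
    }

    // The system calls this if the task runs out of time.
    task.expirationHandler = { dataTask.cancel() }
    dataTask.resume()
}
```

You would typically call `scheduleAppRefresh()` when the app moves to the background. The earliest begin date is only a hint, and the system decides when the task actually runs.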

import UIKit

import Background

Annotate Text Content Types for Better Accessibility

You know how frustrating it is to hear some people read out URLs? (“eɪʧ ti ti pi ˈkoʊlən slæʃ slæʃ ˈdʌbəlju ˈdʌbəlju ˈdʌbəlju dɑt”…) That’s what it can be like when VoiceOver tries to read something without knowing more about what it’s reading.

iOS 13 promises to improve the situation considerably with the new accessibility property and UIAccessibility NSAttributed attribute key.

Whenever possible, be sure to annotate views and attributed strings with the constant that best describes the kind of text being displayed:

- UIAccessibilityTextualContextConsole
- UIAccessibilityTextualContextFileSystem
- UIAccessibilityTextualContextMessaging
- UIAccessibilityTextualContextNarrative
- UIAccessibilityTextualContextSourceCode
- UIAccessibilityTextualContextSpreadsheet
- UIAccessibilityTextualContextWordProcessing
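For instance, assuming the property meant above is `accessibilityTextualContext`, annotating a label that displays code could be as simple as the following. The label and its text are placeholders.

```swift
import UIKit

let codeLabel = UILabel()
codeLabel.text = "let answer = 42"

// Hint to VoiceOver that this text is source code rather than narrative prose.
codeLabel.accessibilityTextualContext = .sourceCode
```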

Remove Implicitly Unwrapped Optionals from View Controllers Initialized from Storyboards

SwiftUI may have signaled the eventual end of Storyboards, but that doesn’t mean that things aren’t getting better, or won’t continue to, until that day comes (if it ever does).

One of the most irritating anti-patterns for Swift purists when working on iOS projects with Storyboards has been view controller initialization. Due to an impedance mismatch between Interface Builder’s “prepare for segues” approach and Swift’s object initialization rules, we frequently had to choose between making all of our properties non-private, variable, and (implicitly unwrapped) optionals, or forgoing Storyboards entirely.

Xcode 11 and iOS 13 allow these paradigms to reconcile their differences by way of the new @IBSegue attribute and some new UIStoryboard class methods:

First, the @IBSegue attribute can be applied to view controller method declarations to designate the method as the API responsible for creating a segue’s destination view controller (i.e. the destination property of the segue parameter in the prepare(for:sender:) method).

@IBSegue

Second, the UIStoryboard class methods instantiate and instantiate offer a convenient block-based customization point for instantiating a Storyboard’s view controllers.
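Putting both pieces together, here is a sketch that assumes the attribute is `@IBSegueAction` and the storyboard method is `instantiateViewController(identifier:creator:)`. The `Person` fields, the view controller classes, the "Main" storyboard name, and the "Profile" identifier are all hypothetical.

```swift
import UIKit

struct Person {
    let name: String
}

final class ProfileViewController: UIViewController {
    // No optional and no `var`: the value is supplied at initialization.
    let person: Person

    init?(coder: NSCoder, person: Person) {
        self.person = person
        super.init(coder: coder)
    }

    @available(*, unavailable)
    required init?(coder: NSCoder) {
        fatalError("Use init(coder:person:) instead")
    }
}

final class ContactsViewController: UIViewController {
    var selectedPerson = Person(name: "Example")

    // Connected to a segue in Interface Builder; UIKit calls this to create
    // the destination instead of using the default coder initializer.
    @IBSegueAction
    func makeProfileViewController(coder: NSCoder,
                                   sender: Any?,
                                   segueIdentifier: String?) -> ProfileViewController? {
        return ProfileViewController(coder: coder, person: selectedPerson)
    }

    // The programmatic equivalent, without a segue.
    func presentProfile(for person: Person) {
        let storyboard = UIStoryboard(name: "Main", bundle: nil)
        let profile = storyboard.instantiateViewController(identifier: "Profile") { coder in
            ProfileViewController(coder: coder, person: person)
        }
        present(profile, animated: true)
    }
}
```

Either way, the destination view controller gets its dependencies through its initializer, so `person` never has to be optional or mutable.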

import UIKit

struct Person { … }

class Profile

Together with the new UIKit Scene APIs, iOS 13 gives us a lot to work with as we wait for SwiftUI to mature and stabilize.

That does it for our round-up of iOS 13 features that you may have missed. But rest assured — we’re planning to cover many more new APIs in future NSHipster articles.

If there’s anything we missed that you’d like for us to cover, please @ us on Twitter!